How to Migrate VMs to Containers Using Kubernetes

Virtual machines (VMs) are software emulations of a physical computer, each providing virtualised hardware and its own operating system. Containers, on the other hand, offer an isolated environment to run your application while sharing the host operating system's kernel.

Kubernetes is an open-source container orchestration tool that makes it easier to manage how containers work together within a cluster of computers.

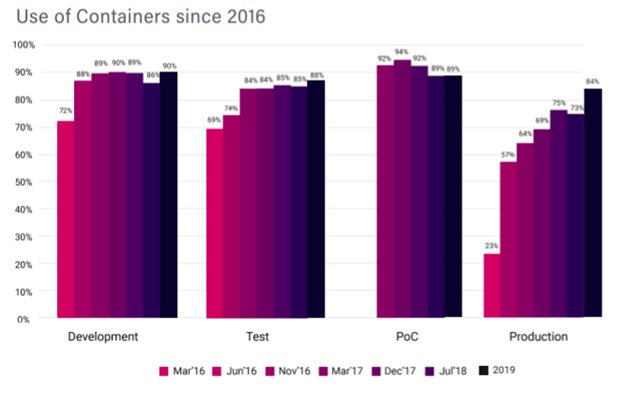

Containers are rapidly becoming the norm. In the Cloud Native Computing Foundation (CNCF) 2019 survey, 84% of respondents reported using containers in production.

Kubernetes also leads among the container tools in use. Although the survey counted 109 tools for managing containers, 89% of respondents still use some form of Kubernetes to deploy their production workloads.

This growth indicates that more companies are trusting container technology to deploy their production workloads.

(Image source: cnf.io)

Kubernetes offers a multitude of benefits, such as flexibility and multi-cloud capability, and it is open source to boot. Such functionality makes Kubernetes the market leader and the preferred choice for companies deploying their applications.

Below, we discuss how you can migrate VMs to containers using Kubernetes. We have refined this migration approach through successful conversion cycles that delivered immediate business improvements to Zsah’s clients.

Stage 1 – Create a Migration Plan

Create a migration roadmap that begins by moving the more stable applications followed by the complex ones that need a more mature container stack. There are three methods to transfer VMs to containers:

- Lift and Shift: involves moving all parts of an application as they are and running them in a single container on Kubernetes, without altering the application. Though the lift and shift method is more straightforward, it limits the application's configuration options and leaves no room for refactoring.

- Refactoring: involves making minimal changes to an application's code without altering its functionality and features. Refactoring allows companies to tap into container benefits and improve the application's scalability.

- Architecting: this method redesigns the application's architecture, moving it from a monolith to microservices. It transforms an application from a single unified platform into a combination of services working together, which adds complexity.
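As a rough illustration of the roadmap idea above (stable applications first, complex ones later), the ordering can be sketched in a few lines of Python. The `App` fields and the sample application names are hypothetical, and counting dependencies is only one possible proxy for complexity.

```python
from dataclasses import dataclass


@dataclass
class App:
    name: str
    stable: bool       # assumption: the team has judged this app stable
    dependencies: int  # assumption: number of services it talks to, a rough complexity proxy


def migration_order(apps):
    """Return apps with stable ones first, then by ascending dependency count."""
    return sorted(apps, key=lambda a: (not a.stable, a.dependencies))


# Hypothetical inventory, for illustration only.
apps = [
    App("billing", stable=False, dependencies=6),
    App("static-site", stable=True, dependencies=0),
    App("reporting", stable=True, dependencies=2),
]

roadmap = [a.name for a in migration_order(apps)]
print(roadmap)
```

In practice the ranking would also weigh business risk and data volume; the point is simply to make the order explicit and reviewable.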

In a nutshell, moving your VMs to Kubernetes containers involves the following steps:

- First, determine the clusters you need for each environment: production, testing, and development.

- Apply the 12-factor principles while migrating VMs to containers, i.e., codebase, dependencies, config, backing services, build/release/run, processes, port binding, concurrency, disposability, dev/prod parity, logs, and admin processes

- Use Kubernetes Secrets to store credentials

- Map components between VMs and containers

- Containerise the applications

- Develop Kubernetes deployment files and deploy the Docker images to the cluster
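To make the 12-factor "config" principle from the list above concrete, here is a minimal Python sketch that reads settings from environment variables instead of files baked into a VM. The variable names (`DATABASE_URL`, `PORT`, `LOG_LEVEL`) and the example URL are assumptions chosen for illustration, not names from any particular application.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str
    port: int
    log_level: str


def load_settings(env=os.environ):
    """Build settings from environment variables (12-factor 'config')."""
    return Settings(
        database_url=env["DATABASE_URL"],       # required: raises KeyError if missing
        port=int(env.get("PORT", "8080")),      # optional, with a default
        log_level=env.get("LOG_LEVEL", "info"),  # optional, with a default
    )


# A plain dict stands in for the container's environment in this example.
example_env = {"DATABASE_URL": "postgres://db.example.internal/app", "PORT": "9000"}
settings = load_settings(example_env)
print(settings)
```

In a cluster, the same variables would typically be injected from a ConfigMap or Secret, so the image stays identical across development, testing, and production.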

Stage 2 – Knowledge Transfer

Knowledge transfer includes understanding how the old system was set up, how it works, how data is stored and processed, how components interact, and so on. It's also important to see how the existing VMs carry out each of these tasks.

We recommend that you put together a report of all findings from your study. Send out any reports or updates as appropriate to keep project stakeholders informed about progress on the migration process.

Once you have gathered enough information and feedback from stakeholders, create an infrastructure plan to migrate containers to Kubernetes clusters in preparation for the next stage of development. Build container images and deployment configurations based on what you have learned so far, without breaking anything.

There are two main phases of the migration process: migrating data and migrating applications. Kubernetes provides a way to define how to deploy your containers, how they interact with one another, and how many resources each container needs.

This is where the Zsah API comes in handy for users who already know what their application's dependencies on other services look like and want to prepare accordingly ahead of time. It also covers how many instances, or replicas, you would like to run at any given point in time.
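As a sketch of what "defining how to deploy your containers" looks like, the Python below builds a Kubernetes Deployment manifest with a replica count and per-container resource requests. The application name, image, and resource figures are hypothetical; the output is JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json


def deployment(name, image, replicas, cpu, memory, port):
    """Return an apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # how many Pod copies to keep running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Resources each container asks for and may not exceed.
                        "resources": {
                            "requests": {"cpu": cpu, "memory": memory},
                            "limits": {"cpu": cpu, "memory": memory},
                        },
                    }]
                },
            },
        },
    }


# Hypothetical values, for illustration only.
manifest = deployment("web", "registry.example.com/web:1.0.0",
                      replicas=3, cpu="250m", memory="256Mi", port=8080)
print(json.dumps(manifest, indent=2))
```

Setting requests equal to limits is just one possible choice here; many teams set lower requests and higher limits once they have usage data.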

You can also deploy the API on Kubernetes containers to direct traffic to the new container service. Ensure that specific VM-level configurations (DNS servers, security group rules) are translated into their Kubernetes equivalents.
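One way to picture the translation of security group rules is as a Kubernetes NetworkPolicy. The sketch below assumes a hypothetical export format for the VM firewall rules (a list of dicts with `cidr`, `protocol`, and `port`); real exports will differ, and a NetworkPolicy is only enforced when the cluster's network plugin supports it.

```python
import json


def network_policy(name, app_label, rules):
    """Turn simple allow-ingress rules into a networking.k8s.io/v1 NetworkPolicy.

    `rules` is a hypothetical export of VM security group rules:
    [{"cidr": "10.0.0.0/16", "protocol": "tcp", "port": 443}, ...]
    """
    ingress = [
        {
            "from": [{"ipBlock": {"cidr": rule["cidr"]}}],
            "ports": [{"protocol": rule["protocol"].upper(), "port": rule["port"]}],
        }
        for rule in rules
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            # The policy applies to Pods carrying this label.
            "podSelector": {"matchLabels": {"app": app_label}},
            "policyTypes": ["Ingress"],
            "ingress": ingress,
        },
    }


# Hypothetical rules, for illustration only.
vm_rules = [
    {"cidr": "10.0.0.0/16", "protocol": "tcp", "port": 443},
    {"cidr": "192.168.1.0/24", "protocol": "tcp", "port": 8080},
]
policy = network_policy("web-allow-ingress", "web", vm_rules)
print(json.dumps(policy, indent=2))
```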

The first step in transferring knowledge is developing bespoke container images. This involves creating purpose-built containers with dependencies and including a "release" function within the code.

Once you create the bespoke container images, store the assets in an S3-compatible storage system. This system is part of the Kubernetes cluster and allows you to scale your application.

Stage 2 – Infrastructure Build and Migration Phase

VM-based production workloads should be refactored to make room for containerisation. Refactoring allows you to restructure and divide applications into services with minimal changes to the code. The infrastructure build phase involves creating an initial Kubernetes cluster using K3s.

Create a separate repository to handle the code for creating "base container" images. The base images repository consists of Dockerfiles (blueprints) for the containers and a .gitlab-ci.yml CI/CD file for automating the building, tagging, and pushing of container images to the GitLab registry.

After creating the base container images, compose "application-specific images" by adding files such as a Dockerfile, an Nginx config, and a supervisor process-handling config to each application's existing repository.

Once this step is complete, you can move on to the data migration phase. Again, Kubernetes provides a few options for handling migration, depending on how much control you need over container deployment and over how containers interact with one another at the infrastructure level.

The easiest way is to use StatefulSets, which give each Pod a stable identity and its own persistent storage so that state survives when containers are rescheduled. However, in cases where users need more control over stateless workloads, a Deployment can be used instead; it lets you define your deployment configuration, including how many replicas should exist at any given point in time. Note that Pod Presets, an alpha feature that injected settings into Pods at creation time, were removed in Kubernetes 1.20.
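For stateful workloads, a StatefulSet manifest might look like the Python sketch below, which gives every replica its own persistent volume claim. The database name, image, storage size, and mount path are hypothetical, and the manifest assumes a headless Service with the same name already exists.

```python
import json


def stateful_set(name, image, replicas, storage, mount_path):
    """Return an apps/v1 StatefulSet manifest with one volume claim per replica."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,  # assumption: a headless Service with this name exists
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                    }]
                },
            },
            # Each Pod gets its own PersistentVolumeClaim created from this template.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": storage}},
                },
            }],
        },
    }


# Hypothetical values, for illustration only.
db = stateful_set("orders-db", "registry.example.com/postgres:15",
                  replicas=2, storage="10Gi", mount_path="/var/lib/postgresql/data")
print(json.dumps(db, indent=2))
```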

Next, develop a configurable Helm chart that templates your Kubernetes manifests and configurations. A chart makes deployments easier to configure and faster to roll out.

Automate the deployment of new application releases by creating an infrastructure-related repository to house the Helm chart, configuration files, and GitLab CI YAML files.

For a like-for-like migration, use a Kubernetes Ingress controller (Traefik) for traffic routing and TLS termination (HTTPS), and MetalLB for load balancing between workloads.
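A routing rule with TLS termination can be sketched as an Ingress manifest, again generated from Python. The hostname, Service name, and TLS Secret name are hypothetical, and the ingress class `traefik` is an assumption that depends on how Traefik is installed in your cluster.

```python
import json


def ingress(name, host, service, port, tls_secret, ingress_class="traefik"):
    """Return a networking.k8s.io/v1 Ingress that routes one host to one Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "ingressClassName": ingress_class,  # assumption: class name used by your Traefik install
            # TLS is terminated at the ingress using the certificate in this Secret.
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{
                "host": host,
                "http": {
                    "paths": [{
                        "path": "/",
                        "pathType": "Prefix",
                        "backend": {"service": {"name": service, "port": {"number": port}}},
                    }]
                },
            }],
        },
    }


# Hypothetical values, for illustration only.
web_ingress = ingress("web", "app.example.com", "web", 8080, "web-tls")
print(json.dumps(web_ingress, indent=2))
```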

Store your configurations and credentials as Kubernetes Secrets in the cluster, and enable encryption at rest, because Secrets are not encrypted by default.
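The sketch below shows how a Secret manifest is put together in Python. Values in the `data` field are base64-encoded, which is an encoding rather than encryption, so the manifest itself should be kept out of version control. The key names and values are placeholders.

```python
import base64
import json


def secret(name, values):
    """Return a v1 Secret manifest with base64-encoded values."""
    data = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": data,
    }


# Placeholder credentials, for illustration only.
db_secret = secret("db-credentials", {"DB_USER": "app", "DB_PASSWORD": "change-me"})
print(json.dumps(db_secret["metadata"]))  # avoid printing the secret values themselves
```

A container can then consume these keys as environment variables or mounted files, which keeps credentials out of the image.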

Stage 3 – Test for Use Cases

Testing allows you to create more robust delivery pipelines that deliver services on time. Use delivery tools such as GitLab, Jenkins, or GoCD to run your applications and perform integration tests on the services you broke out of the VMs. Testing the container components prevents runtime issues and reduces service timeouts when performing tasks.

Future phases

Migrating VMs to Kubernetes containers is only the first step. The next is training your team on Continuous Delivery workflows and giving them the skills to manage applications and infrastructure in the context of Kubernetes containers.

The final phase also includes future steps such as monitoring and scaling needs that may come up due to new challenges or changes in the application requirements themselves. Make sure you follow best practices for how to monitor the cluster.

The Kubernetes ecosystem offers a few options, such as Prometheus and Metrics Server (the successor to the now-retired Heapster), to be chosen according to your monitoring needs. In addition to this, it's essential that you scale your containers according to how many resources they require; Kubernetes can handle this automatically once autoscaling, such as the Horizontal Pod Autoscaler, is configured.
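To give a feel for how automatic scaling decides on a replica count, here is a small Python sketch of the core calculation documented for the Horizontal Pod Autoscaler: the desired count is the current count multiplied by the ratio of the observed metric to the target, rounded up. The real controller also applies a tolerance band and stabilisation windows, which this sketch leaves out; the minimum and maximum values are illustrative defaults.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Core HPA calculation: ceil(current * observed / target), clamped to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target: scale out.
scale_out = desired_replicas(4, current_metric=90, target_metric=60)
# 4 replicas at 30% average CPU with a 60% target: scale in.
scale_in = desired_replicas(4, current_metric=30, target_metric=60)
print(scale_out, scale_in)
```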

Advantages of Migrating VMs to Kubernetes Containers

Migrating your VMs to containers has several operational, development, and infrastructure benefits.

Infrastructure Efficiency

Migrating your applications to containers leads to better utilisation of computing resources. By leveraging auto-scaling and auto bin-packing, Kubernetes places containers within nodes and only scales when necessary.
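The idea behind bin-packing can be illustrated with a simple first-fit-decreasing heuristic in Python. This is not the Kubernetes scheduler's actual algorithm, which scores nodes on many more criteria; it only shows why packing containers by their CPU requests leads to fewer, better-used nodes. The request sizes and node capacity are made-up figures in millicores.

```python
def first_fit_decreasing(requests_millicores, node_capacity):
    """Place CPU requests onto as few nodes as this simple heuristic manages.

    Returns a list of nodes, each a list of the requests placed on it.
    """
    nodes = []
    for request in sorted(requests_millicores, reverse=True):
        if request > node_capacity:
            raise ValueError(f"request {request}m exceeds node capacity")
        for node in nodes:
            # Put the container on the first node with enough room left.
            if sum(node) + request <= node_capacity:
                node.append(request)
                break
        else:
            # No existing node fits, so a new node is needed.
            nodes.append([request])
    return nodes


# Five containers packed onto nodes with 2000m (2 CPU cores) each.
placement = first_fit_decreasing([500, 1500, 250, 1000, 750], node_capacity=2000)
print(placement)
```

Running one VM per workload would need five machines here, whereas the packed placement fits on two nodes.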

Zsah tailors the Kubernetes migration to your organisation’s current needs and future aspirations so that you get the most out of your newly acquired containers.

Our managed-cloud solution provides a holistic DevOps experience that unlocks efficiency, accelerates innovation and extracts maximum value from your Kubernetes investment.

Additionally, containers share the host operating system's resources and don't each require a dedicated guest OS or dedicated CPUs. This allows more workloads to run on the same hardware with lower memory usage.

Increased efficiency also reduces the cost of ownership. Efficiency means that you'll run more workloads and pay less for container components.

Improved Productivity

Containers provide various tools to empower your teams for faster development and deployment. Features such as Kubernetes self-healing reduce ticketing and incident handling. There's also unified management of workloads, making it easier to manage your hybrid landscapes.

Developers don't have to manage kernel security upgrades because the platform manages the host OS, allowing your developers to focus on critical work.

Portability

Containers are flexible: they can be built and run on desktops, cloud servers, on-premises servers, or hybrid landscapes.

Conclusion

Migrating VMs is essential to optimising infrastructure and services by moving them from legacy systems to new technologies like Kubernetes. The steps involved vary based on how much control over, or knowledge of, the old system you have. Ultimately, though, it comes down to migrating data first (if applicable) and then transferring applications onto Kubernetes clusters, while keeping track of how the containers interact and how they are deployed.